Mistral, the French AI startup backed by Microsoft and valued at $6 billion, has released its first generative AI model for coding, dubbed Codestral.

Like other code-generating models, Codestral is designed to help developers write and interact with code. It was trained on more than 80 programming languages, including Python, Java, C++ and JavaScript, Mistral explains in a blog post. Codestral can complete coding functions, write tests and “fill in” partial code, as well as answer questions about a codebase in English.

Mistral describes the model as “open,” but that’s up for debate. The startup’s license prohibits the use of Codestral and its outputs for any commercial activities. There’s a carve-out for “development,” but even that has caveats: The license goes on to explicitly ban “any internal usage by employees in the context of the company’s business activities.”

The reason could be that Codestral was trained partly on copyrighted content. Mistral didn’t confirm or deny this in the blog post, but it wouldn’t be surprising; there’s evidence that the startup’s previous training datasets contained copyrighted data.

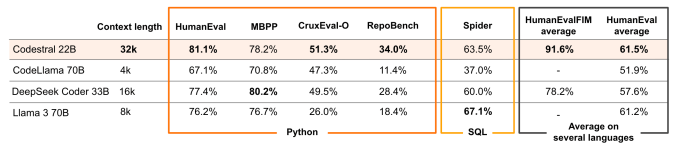

Codestral might not be worth the trouble, in any case. At 22 billion parameters, the model requires a beefy PC to run. (Parameters are the internal values a model learns during training; they essentially define its skill at a problem, like analyzing and generating text.) And while it beats the competition on some benchmarks (which, as we know, are unreliable), it's hardly a blowout.

Though impractical for most developers and only an incremental improvement in performance, Codestral is sure to fuel the debate over the wisdom of relying on code-generating models as programming assistants.

Developers are certainly embracing generative AI tools for at least some coding tasks. In a Stack Overflow poll from June 2023, 44% of developers said they already use AI tools in their development process, while 26% said they plan to soon. Yet these tools have obvious flaws.

An analysis by GitClear of more than 150 million lines of code committed to project repos over the past several years found that generative AI dev tools are resulting in more mistaken code being pushed to codebases. Elsewhere, security researchers have warned that such tools can amplify existing bugs and security issues in software projects. And according to a study from Purdue, over half of the answers OpenAI's ChatGPT gives to programming questions are wrong.

That won't stop companies like Mistral from attempting to monetize (and gain mindshare with) their models. This morning, Mistral launched a hosted version of Codestral on its Le Chat conversational AI platform as well as on its paid API. Mistral says it has also worked to build Codestral into app frameworks and development environments like LlamaIndex, LangChain, Continue.dev and Tabnine.