In this section, we present our conceptual framework for interoperability, outline the practical implementation of our PoC, and describe its evaluation in a feasibility study with real-world patient data. The PoC is applied to a study within the Leuko-Expert project funded by the German Ministry of Health. The objective of the project is to develop an expert system to aid in the diagnosis of the rare disease (RD) leukodystrophy, a genetic disorder that affects the brain and causes movement and sensory perception disturbances29. The project involves clinical and genetic data collected and generated by the clinics in Aachen, Leipzig, and Tübingen, Germany (see Fig. 1). Specifically, patient data is collected from two reference centers specialized in both childhood and adult variants of leukodystrophies, located at the University Hospitals of Tübingen and Leipzig, and from patients who received differential diagnoses at the University Hospital Aachen. For data provision, the project relies on the data integration centers (DICs – https://www.medizininformatik-initiative.de/en/consortia/data-integration-centres) at the corresponding university hospitals, which are part of the MII and facilitate external access to the data.

The patient data used in this study is subject to privacy and ethical considerations and, as such, is not publicly available. Access is restricted to protect patient confidentiality and comply with ethical guidelines. Data access will be provided upon request, taking into account the data-sharing infrastructure. To apply for data access, use the Research Data Portal for Health (https://forschen-fuer-gesundheit.de, currently available only in German) or contact the DICs in Aachen, Leipzig, and Tübingen directly.

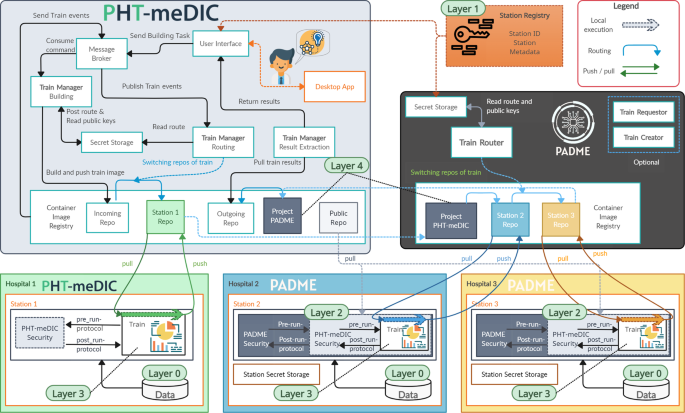

Fig. 1: Deployment of stations within the Leuko-Expert project. Leipzig and Aachen use a PADME station, whereas Tübingen utilizes a PHT-meDIC station. In Layer 0 (see Fig. 2), a Research Electronic Data Capture (REDCap) system is implemented at each institution to facilitate data provision. Since two PHT implementations are present, their interoperability might be desirable to enlarge the global dataset.

Our analysis, ‘Data Discovery’, executes a train that is exchanged between the PADME and PHT-meDIC infrastructures. In the Leuko-Expert scenario, interoperability between these infrastructures is desirable because it allows more data to be analyzed: two DICs have deployed PADME and one has deployed PHT-meDIC (see Fig. 1). For exact implementation details, see our supplementary materials30. Note that the purpose of the feasibility study is to assess the practicability of our PoC; the selected analyses are arbitrary. Further, when we refer to interoperability, we use the term synonymously with technical or horizontal interoperability.

Conceptual framework for interoperability

Among our key outcomes was the formulation of five distinct layers to achieve PHT interoperability. This layered methodology draws inspiration from the ‘Layers of Interoperability’ by Benson et al.19. Our layers have been derived from the review in the Methods section and their relationship is visualized in Fig. 2 and Fig. 3. The five layers can be summarized as follows:

- Layer 0 – Data integration. The foundational step in our multi-layered approach involves harmonizing data across different infrastructures. This layer focuses on aligning and integrating the different data formats, structures, and standards from various data sources into a unified format such that it can be seamlessly processed by the analysis train.

- Layer 1 – Assigning (globally unique) identifiers to stations. In order to transfer trains between infrastructures, it is necessary to establish a method for identifying the station unambiguously across infrastructural borders. This is essential to ensure the correct routing of trains between the infrastructures and stations.

- Layer 2 – Harmonizing the security protocols. The PHT infrastructures were developed with different requirements regarding the security protocols and the encryption of the train. Therefore, we formulate an overarching security protocol that aligns with infrastructure-specific requirements.

- Layer 3 – Common metadata exchange schema. By employing distinctive station identifiers (Layer 1), we establish the initial building block of a shared communication standard. As the security protocol also requires metadata for proper functioning (e.g., exchange of public keys), our third objective is to create a common set of metadata that facilitates technical interoperability and also extends to a first foundation for semantic compatibility. This layer primarily merges the metadata items from Layers 1 and 2 into a machine-readable format.

- Layer 4 – Overarching business logic. After we have established all the preliminaries mentioned above, we need to develop the actual business logic to transfer trains between the infrastructures from a technical perspective based on the route defined by the identifiers (Layer 1).

Fig. 2: Our multi-layered framework for interoperability: From data integration to business logic. In our interoperability framework, Layer 0 is associated with the data level. The harmonization of station types, such as PADME or PHT-meDIC, is addressed in Layers 1 and 2, and to some extent in Layer 3. The overarching business logic is encapsulated within Layer 4 at the infrastructure level. The arrows illustrate the interdependencies and collaborative interactions across the layers: Layer 4 utilizes the metadata established by Layer 3 to navigate the trains through the infrastructures. Layer 3 consolidates the metadata produced by Layers 2 and 1. Layer 2 then uses the unique station identifiers to secure the trains accordingly.

Fig. 3: Our concept for the train transfer between PADME and PHT-meDIC. Each ecosystem has a dedicated interface that can receive trains. After a train from another ecosystem has arrived, it is ‘reloaded’ into an ecosystem-specific and -compatible train (Layer 4). This can be interpreted as a ‘transfer station’ in the real world, where cargo is reloaded from one train to another. Since each ecosystem adheres to its specific security protocol and the reloaded train contents are encrypted according to this protocol, we modularized each security protocol and made it available to the other ecosystem. The modularized protocol is then used at the stations to decrypt (Layer 2) the contents – see Fig. 4 – using the metadata (Layer 3) attached to the train. The stations can be accessed through their unique station identifier (Layer 1).

In the following, we describe each of the layers in more detail.

Layer 0 – Data integration

Conceptualization

In our scenario, the challenge lies in integrating three decentralized datasets from different institutions. Each institution houses its raw data in various clinical application systems. For example, as mentioned above, the data provided by Tübingen and Leipzig is highly specialized with respect to both childhood and adult variants of leukodystrophies, while Aachen only provides data on differential diagnoses. This data is unstructured and varies considerably in volume, format, and content, which complicates standardization and integration. Therefore, a central data repository is needed in each institution that consolidates this diverse data in a more structured and accessible format. A key aspect of this integration process is the definition and implementation of a mutual data schema, such as the German MII Core Data Set (https://www.medizininformatik-initiative.de/en/medical-informatics-initiatives-core-data-set). This schema provides a standardized structure for the data, ensuring consistency and facilitating interoperability among the different institutions at the data level. Note that the design of such an ETL (Extract, Transform, Load) pipeline can vary widely, influenced by factors such as the nature of the data, the chosen data schema, and the specific technologies for data provision. In the subsequent section, we briefly outline our specific strategy to harmonize the distributed data on leukodystrophy.

PoC implementation

To harmonize the data and make it analysis-ready, we store the data in a Research Electronic Data Capture (REDCap – https://www.project-redcap.org/) database system. REDCap is primarily designed for data collection and management in research studies and clinical trials and is, therefore, suitable for our purpose31,32. We acknowledge that other technologies, such as Fast Healthcare Interoperability Resources (FHIR), could also facilitate data provision. However, in our scenario, REDCap was pre-installed at all participating sites and the medical teams were already familiar with this tool. For these reasons, we opted to use REDCap for our PoC. Each participating institution received a pre-compiled REDCap questionnaire, establishing a data schema to collect, structure, and standardize patient data across all institutions. The clinical teams in each hospital filled out the questionnaires for each patient manually or by semi-automatic means. The results of the REDCap questionnaire, which we refer to as the dataset below, are divided into three distinct sections:

- Baseline: Master data that contains patient details such as sex, age, and diagnosis.

- Examination: Data that provides information regarding the patient’s health status. This includes, among others, attributes such as abnormalities in higher brain functions or loss of libido that are defined in the Human Phenotype Ontology (HPO)33. The answers to the questions within this section encompass a wide spectrum of response types, spanning from yes/no/unknown options to open text fields.

- Genetics: Data that refers to genetics and serves as a documentation of the results obtained from genetic testing. This section includes details such as the observation year, the specific affected gene, and other attributes related to genes, such as the classification of the American College of Medical Genetics (ACMG) or the underlying mutation in the nomenclature of the Human Genome Variation Society (HGVS)34,35.

While the baseline section is mandatory for each patient, the others are optional and can consist of multiple instances (i.e., multiple examinations). In the final step, after all patient data instances have been entered into the REDCap system at each DIC, the REDCap database has been made accessible to the station software located at the respective DIC (see Fig. 1).
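The three-section structure described above can be sketched as a small data model. This is only an illustration: the class and field names (`Baseline`, `Examination`, `Genetics`, `PatientRecord`, `section_counts`, and all attributes) are assumptions chosen for readability and do not reproduce the actual REDCap questionnaire.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Baseline:
    """Mandatory master data (hypothetical field names)."""
    sex: str
    age: int
    diagnosis: str


@dataclass
class Examination:
    """Optional and repeatable; answers keyed by a phenotype term label."""
    # Values may be "yes"/"no"/"unknown" or free text.
    findings: dict[str, str] = field(default_factory=dict)


@dataclass
class Genetics:
    """Optional and repeatable documentation of a genetic test result."""
    observation_year: int
    gene: str
    acmg_class: Optional[str] = None
    hgvs_variant: Optional[str] = None


@dataclass
class PatientRecord:
    """One patient: exactly one baseline, any number of other sections."""
    baseline: Baseline
    examinations: list[Examination] = field(default_factory=list)
    genetics: list[Genetics] = field(default_factory=list)


def section_counts(records: list[PatientRecord]) -> dict[str, int]:
    """Count how many instances of each section a station holds."""
    return {
        "Baseline": len(records),
        "Examination": sum(len(r.examinations) for r in records),
        "Genetics": sum(len(r.genetics) for r in records),
    }
```

Modeling the optional sections as lists reflects that a patient can have several examinations or genetic results, while the baseline is required exactly once.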

After the establishment of the data provision for the stations Aachen, Leipzig, and Tübingen, we need to move a layer up to ensure that the stations are accessible and can pull the trains. This includes assigning unique identifiers to the stations to provide a clear and unambiguous destination for the analysis train.

Layer 1 – Assigning (globally unique) identifiers to stations

Conceptualization

In this layer, we address the disambiguation of the stations within a PHT ecosystem, especially during the route selection process. Up until now, the infrastructures have been using their own custom station identifiers, creating a potential risk of ambiguities due to the absence of a shared agreement on these identifiers between different infrastructures. Furthermore, the lack of global identifiers prevents one from choosing stations that belong to a different ecosystem for the train route. Therefore, we employ an additional authority on top of the infrastructures that acts as an indexing or directory service. In general, this approach is inspired by the well-established Domain Name System (DNS), which manages the namespace of the Internet36,37. Establishing such a namespace for the stations is our main objective, such that we can select stations for the train route and support the routing through our business logic (see Layer 4). In essence, we require that each station’s identifier must be a Uniform Resource Locator (URL). This URL represents the destination where the train is to be directed, enabling its execution at that particular station.

PoC implementation

Taking inspiration from DNS servers, we use the Station Registry (SR – https://station-registry.de/) as a component that serves as a central database for stations. Note that the SR has already been partially introduced in the work by Welten et al.38. Station administrators must register their stations at the SR before or after the installation and specify the affiliation of the station, e.g., in our case, either as a PADME or a PHT-meDIC station. Similarly to DNS, we have chosen a basic hierarchical structure for our station identifiers:

$$\underbrace{\texttt{https://station-registry.de/}}_{\text{authority}}\underbrace{\texttt{PADME/}}_{\text{affiliation}}\underbrace{\texttt{d7b0a9a7-07fd-4a31-b93a-3c946dc82667}}_{\text{UUID}}$$

Using these URLs, the business logic (as described in Layer 4) can effectively resolve the URLs and orchestrate the routing of trains between stations. This process is analogous to how requests are navigated on the Internet, starting from a higher level (the PHT ecosystem) and moving down to a more granular level (the individual stations). This hierarchical structure allows us to precisely locate each station, determine its association with a particular ecosystem, and dispatch the trains accordingly.
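To illustrate the hierarchical identifier, the following minimal sketch splits a station URL into its authority, affiliation, and UUID parts. The names `StationId` and `parse_station_id` are hypothetical, and the sketch only parses the string; it does not contact the SR or reflect how the SR resolves identifiers internally.

```python
from dataclasses import dataclass
from urllib.parse import urlparse
from uuid import UUID


@dataclass(frozen=True)
class StationId:
    authority: str      # e.g. "https://station-registry.de/"
    affiliation: str    # e.g. "PADME" or "PHT-meDIC"
    station_uuid: UUID


def parse_station_id(url: str) -> StationId:
    """Split a station identifier URL into authority, affiliation, and UUID."""
    parts = urlparse(url)
    segments = [s for s in parts.path.split("/") if s]
    if parts.scheme != "https" or not parts.netloc or len(segments) != 2:
        raise ValueError(f"not a valid station identifier: {url!r}")
    affiliation, raw_uuid = segments
    return StationId(
        authority=f"{parts.scheme}://{parts.netloc}/",
        affiliation=affiliation,
        station_uuid=UUID(raw_uuid),  # raises ValueError if malformed
    )


station = parse_station_id(
    "https://station-registry.de/PADME/d7b0a9a7-07fd-4a31-b93a-3c946dc82667"
)
print(station.affiliation)
```

The affiliation segment is the piece a routing component would inspect to decide which ecosystem a station belongs to.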

After establishing a method to identify each station through its URL, our next step involves synchronizing the internal workflows of each station type, whether it is a PADME or PHT-meDIC station. In our study, this specifically entails the alignment of the security protocols used by each station, which is part of the next section.

Layer 2 – Harmonizing the security protocols

Conceptualization

The next aspect that we need to address is the conceptualization of an overarching security concept, coupled with the alignment of the PADME and PHT-meDIC security protocols. One crucial challenge is that each infrastructure adheres to its own protocols and workflows with respect to encryption or the signing of digital assets. To overcome this, we propose adopting modular software containers, a methodology suggested by Hasselbring et al.24, to enhance software and workflow portability. Consequently, our strategy involves the containerization of all security-related processes of each ecosystem’s workflows to enable interoperability24. With this strategy, we achieve the flexibility required to implement these workflows across various ecosystems, while also ensuring the seamless distribution of protocol updates without substantial modifications in other ecosystems. The extracted containerized workflow can serve as a supplemental service, accessible for other PHT ecosystems, allowing different infrastructures to integrate this service into their own workflows. Alternatively, a comparable and probably less resource-intensive approach to distributing security protocols in a container could involve structuring them in a library-like structure that is installed in each involved infrastructure. All in all, the overarching protocol requires the containerized process steps to be arranged in sequence (see Fig. 4 as an example). The train undergoes decryption (pre-run) or encryption (post-run) incrementally, with each containerized module being invoked in succession to perform the necessary operations. The practice of nesting various workflows has the additional advantage that it can be applied multiple times, especially when more than two infrastructures are involved in the process. 
We argue that this overarching security protocol, which may comprise several sub-protocols, adheres to the established security policies of each PHT infrastructure involved, because each sub-protocol is executed in sequence and unchanged. In the following, we provide one exemplary integration of a security protocol in the context of our PoC.

Fig. 4: Interaction of the security concepts of the PADME and PHT-meDIC platforms. The overarching security concept for each infrastructure represents a combination of both. In our scenario, the PADME security protocol is applied on top of the PHT-meDIC protocol, when the train is at a PADME station. At a PHT-meDIC station, the order of the protocols would be flipped: first the PHT-meDIC, then the PADME protocol.

PoC implementation

In our PoC, the security and encryption protocols of the PHT-meDIC ecosystem have been modularized through containerization. The container code can be found in the supplementary materials. This module is then seamlessly integrated into the execution environment of the PADME station(s). Note that this modular approach is reciprocal; the PADME protocol can also be similarly containerized and integrated within the PHT-meDIC station. Conceptually and metaphorically speaking, we consider this module image as a supplementary train that can be pulled and placed next to the real train that arrives at a station. During train execution, both security protocols are executed in sequence while preserving the integrity of the original system’s processes. The operation of this series of security protocols, as exemplified by our two protocols, is shown in Fig. 4 and works as follows:

- Train arrival (Beginning of process): Upon arrival at a PADME station, the train is secured with dual encryption: The external layer conforms to the PADME protocol, whereas the internal layer is encrypted following the PHT-meDIC protocol.

- Pre-run protocol of PADME (Decryption – Outer layer): The initial step involves the PADME pre-run protocol decrypting the external encryption layer.

- Pre-run protocol of PHT-meDIC (Decryption – Inner layer): After the decryption of the outer layer, the PHT-meDIC pre-run protocol decrypts the inner encryption layer, preparing the train for its execution.

- Train execution: Upon successful validation, the train is executed by the station environment.

- Post-run protocol of PHT-meDIC (Re-encryption – Inner layer): Post-execution, the train is re-encrypted, starting with the PHT-meDIC post-run protocol addressing the inner layer.

- Post-run protocol of PADME (Re-encryption – Outer layer): Following the inner layer’s re-encryption, the PADME post-run protocol re-encrypts the external layer.

- Train departure (End of process): This marks the completion of the entire train lifecycle at a station. After this step, the train is dispatched to the next station on the route.
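The ordering of the steps above can be sketched with two toy protocol modules. This is a sketch under strong assumptions: `ToyProtocol` and `visit_station` are hypothetical names, the XOR transformation is a placeholder that is not real encryption, and the actual PADME and PHT-meDIC protocols (including per-station keys, signatures, and validation) are not reproduced here. The sketch only demonstrates that the layers are removed from the outside in and restored from the inside out.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable


@dataclass
class ToyProtocol:
    """Stand-in for a containerized security module. XOR is NOT real encryption."""
    name: str
    key: bytes

    def _xor(self, data: bytes) -> bytes:
        return bytes(b ^ k for b, k in zip(data, cycle(self.key)))

    def post_run(self, payload: bytes) -> bytes:
        # "Encrypt" and prepend a tag so the layer order can be checked.
        return self.name.encode() + b"|" + self._xor(payload)

    def pre_run(self, payload: bytes) -> bytes:
        # Verify that the outermost layer belongs to this protocol, then "decrypt".
        tag, sep, body = payload.partition(b"|")
        if not sep or tag != self.name.encode():
            raise ValueError(f"expected a {self.name} layer")
        return self._xor(body)


def visit_station(train: bytes, outer: ToyProtocol, inner: ToyProtocol,
                  execute: Callable[[bytes], bytes]) -> bytes:
    """Run one station visit: decrypt outer, decrypt inner, execute, re-encrypt."""
    payload = outer.pre_run(train)      # pre-run of the outer protocol
    payload = inner.pre_run(payload)    # pre-run of the inner protocol
    result = execute(payload)           # train execution
    payload = inner.post_run(result)    # post-run of the inner protocol
    return outer.post_run(payload)      # post-run of the outer protocol


padme = ToyProtocol("PADME", b"k1")
medic = ToyProtocol("PHT-meDIC", b"k2")
arriving = padme.post_run(medic.post_run(b"count=0"))
departing = visit_station(arriving, outer=padme, inner=medic,
                          execute=lambda p: p + b";visited")
```

At a PHT-meDIC station the same function would be called with `outer=medic` and `inner=padme`, which mirrors the flipped order described for Fig. 4.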

In Layers 1 and 2 described above, we implicitly required metadata for each dispatched train. This includes details such as the station’s URL for precise train routing and security-related items such as the public keys of each station for encryption, which are especially crucial in Layer 2. To integrate these pieces of information into a single entity, we propose the creation of a unified data exchange schema. This schema will be instrumental in the decryption and encryption processes of the stations, as well as in directing the train on a given route. The formulation of this schema will be discussed below.

Layer 3 – Common metadata exchange schema

Conceptualization

The implementation of a modular security protocol (Layer 2) also requires the presence of metadata (e.g., public keys, hashes, or signatures), which plays a crucial role in ensuring proper functionality. Consequently, based on the initial two layers outlined, we identify an additional requirement: the establishment of a standardized metadata schema. As already pointed out by Lamprecht et al., software metadata is a necessity for (semantic) interoperability18. This corresponds to the definition of Benson et al., who characterize semantic interoperability as the capability ‘for computers to share, understand, interpret and use data without ambiguity’19. Hence, our aim is to develop a standardized set of metadata elements that can be used and exchanged in diverse workflows, ensuring that ‘both the sender and the recipient have data that means exactly the same thing’19. We aim for a solution that can be effortlessly expanded to incorporate security-related and infrastructure-specific requirements. We divide the metadata set into two categories:

- Business Logic Metadata: This information is used by the business logic workflow, enabling tasks such as the routing of trains between stations. Within Layer 1, we have initiated the disambiguation of the stations by globally identifying them and incorporating them into the business logic metadata.

- Security Protocol Metadata: This category contains essential elements of modern security protocols and (asymmetric) encryption systems, such as public keys, hashes, and signatures that enable secure communication and data transmission by ensuring confidentiality, integrity, and authenticity39. The information provided can be utilized by the infrastructures to either apply external security protocols (as described in Layer 2) or their own protocols.

At this point, the question of the location of metadata storage for subsequent processing arises. Given the various possible approaches, such as centralized or decentralized storage, we require that the set of metadata is attached to the train and that each infrastructure handles it for internal business logic. This aligns with the train definitions proposed by Bonino et al., where trains are decomposed into metadata and the payload that contains the analysis27. Attaching metadata to the train offers the benefit of eliminating the need to query this information from external services. This approach is also autonomous and does not rely on any central authority, which ensures that the information is readily available wherever it is required.

PoC implementation

According to our conceptualization, we use a small selection of metadata items as depicted in Fig. 5. This schema covers business-related information concerning the train’s origin (e.g., the source repository), its creator, and the identifiers of the stations to be visited. Note that these (basic) items share similarities with the definitions outlined by Bonino et al.27. Specifically, the route array captures essential station-related information and the station identifiers that have been introduced above in Layer 1. As each route item has a specific index, the route can be systematically executed step by step. Additionally, the metadata includes the public keys of all participating entities, so that assets can be encrypted in a manner that allows only the designated recipient to decrypt them. This is achieved in collaboration with the security protocols outlined in Layer 2, which process these keys. To protect the integrity of the train contents, we also utilize signatures and hashes. In our particular case, each station’s public key is obtained from the SR. The installation and registration process of a station in the SR necessitates the provision of its public key, which is then retrieved from the SR during the route selection. Before the train is dispatched, the metadata is attached to the train.
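A machine-readable form of such a metadata set could look like the following sketch. All names here (`RouteItem`, `TrainMetadata`, `source_repository`, `content_hash`, and so on) are assumptions for illustration and are not the field names of the schema in Fig. 5; the example values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class RouteItem:
    index: int        # position of the stop in the route
    station: str      # station identifier URL (Layer 1)
    public_key: str   # placeholder; a PEM-encoded key in practice


@dataclass
class TrainMetadata:
    # Business logic metadata
    source_repository: str
    creator: str
    route: list[RouteItem] = field(default_factory=list)
    # Security protocol metadata (placeholders)
    content_hash: str = ""
    signature: str = ""

    def next_stop(self, visited: int) -> Optional[RouteItem]:
        """Return the stop after `visited` completed stops, or None when done."""
        ordered = sorted(self.route, key=lambda item: item.index)
        return ordered[visited] if visited < len(ordered) else None

    def to_json(self) -> str:
        """Serialize the metadata so it can be attached to the train."""
        return json.dumps(asdict(self), indent=2)


meta = TrainMetadata(
    source_repository="registry.example.org/trains/data-discovery",
    creator="example-user",
    route=[
        RouteItem(1, "https://station-registry.de/PHT-meDIC/<uuid-b>", "<key-b>"),
        RouteItem(0, "https://station-registry.de/PADME/<uuid-a>", "<key-a>"),
    ],
)
print(meta.to_json())
```

Because each route item carries its own index, the order in which items are stored does not matter; the business logic can always recover the intended sequence.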

Once we have established an identifier mechanism (see Layer 1), aligned the security protocols involved (see Layer 2), and laid the groundwork for a common metadata exchange schema (see Layer 3), the next step is to facilitate actual technical interoperability. This implies that we leverage the work established in the previous layers to facilitate the exchange of actual trains between the two ecosystems, PADME and PHT-meDIC. In the following section, we will introduce a routine that outlines the business logic required for this process.

Layer 4 – Overarching business logic

Conceptualization

The objective of this layer is to perform the transfer of the analysis from one ecosystem to another. As the metadata attached to the train entails the concrete route information, the business logic is able to transfer the digital assets representing the train to a given destination. The destination endpoint can be a container repository, identified by a URL, to which the train can be sent for further processing. There might be several approaches and techniques to transfer and transform a train from one ecosystem to another. In the following, we briefly present our overarching business logic used to enable technical interoperability between PHT-meDIC and PADME.

PoC implementation

We refer to Fig. 3 and Fig. 6 as a graphical representation of our overarching business logic to transfer (container) trains between ecosystems. From a top-level perspective (see Fig. 3), our business logic is modeled after the real-world practice of reloading cargo from one train to another. The encrypted contents (the cargo) of the train, such as code and files, are unloaded from the arriving, external train and loaded into a new train that is compatible with the ecosystem.

From a technical perspective and to streamline the transfer of trains between ecosystems, we established a dedicated input repository (the ‘transfer station’) within each central service component. This repository is the designated location to which external trains should be pushed. A webhook monitors this repository, identifies incoming trains, and initiates the infrastructure-specific business logic to direct the train to its intended station after the train contents have been reloaded. This portal-inspired design decision has the advantage of keeping the actual stations private within the infrastructure, with only one repository accessible by external services or infrastructures. To send a train on its track, the entity requesting the train can select a route that utilizes information from the SR, potentially including stations within the same infrastructure, as well as those that connect different infrastructures. If the next station is assigned to the current infrastructure, the standard business logic proceeds to dispatch the train. In the other case, when the next station is assigned to another infrastructure, the business logic needs to proceed differently: As shown in Fig. 3 and Fig. 6, the business logic of the sending infrastructure detects the external station based on the metadata and transfers the train to the dedicated repository of the receiving infrastructure using a ‘push’ command (when using Container Trains). Subsequently, the receiving infrastructure’s business logic handles the train and prepares/reloads it for the next station along the route. The station receives the train and decrypts the envelope encryption using the modularized security protocol that has been introduced in Layer 2. After the execution of the train, it is re-encrypted using the envelope encryption and pushed back to the central service. 
Depending on the route, the train either remains in the infrastructure and is made available for the next station or is forwarded to the other infrastructure, following the same principle as described above. This cycle continues until all stations along the route have been processed.
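The routing decision described above can be sketched as follows. The function names (`affiliation_of`, `plan_transfers`), the action labels, and the repository addresses are hypothetical; the sketch only plans the sequence of actions and does not push any container images.

```python
from urllib.parse import urlparse


def affiliation_of(station_url: str) -> str:
    """Read the affiliation segment from a station identifier URL (Layer 1)."""
    return urlparse(station_url).path.strip("/").split("/")[0]


def plan_transfers(route: list[str], start_infrastructure: str,
                   transfer_repos: dict[str, str]) -> list[tuple[str, str]]:
    """Return the ordered actions needed to send a train along the route."""
    current = start_infrastructure
    actions = []
    for station_url in route:
        target = affiliation_of(station_url)
        if target != current:
            # Next station belongs to the other infrastructure: push the train
            # to that infrastructure's transfer repository and reload it there.
            actions.append(("push", transfer_repos[target]))
            actions.append(("reload", target))
            current = target
        # Standard business logic: make the train available to the station.
        actions.append(("dispatch", station_url))
    return actions


repos = {
    "PADME": "padme.example.org/transfer",        # assumed address
    "PHT-meDIC": "medic.example.org/transfer",    # assumed address
}
route = [
    "https://station-registry.de/PADME/a",
    "https://station-registry.de/PADME/b",
    "https://station-registry.de/PHT-meDIC/c",
]
for action in plan_transfers(route, "PADME", repos):
    print(action)
```

Only the transfer repositories appear as cross-infrastructure targets, which matches the design goal of keeping individual stations private within their infrastructure.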

Up to now, we have enabled the interoperability of the two involved ecosystems. Hereafter, we describe the evaluation of our PoC in the real-world setting of a research project.

Conducting cross-infrastructural analysis

Upon completion of our PoC development, we validate our approach in a real-world scenario with real patient data. For our interoperability study, we have opted to conduct a basic statistical analysis of the leukodystrophy data at hand. Since our concept is based on Container Trains, we argue that other trains with different analytical functions will also operate successfully within our PoC. The process of our evaluation is shown in Fig. 7.

Fig. 7: Validation process for the PoC. Step 1 encompasses the deployment of the PoC (Layers 1–4). In Step 2, we set up the data provision using REDCap. Step 3 involves an initial test run to ensure operational functionality. Step 4 is dedicated to the development of the ‘Data Discovery’ train that performs the analysis. The final Step 5 includes executing the analysis, extracting results, and benchmarking our PoC to acquire quantitative performance metrics for our interoperability concept.

After deploying the components described in the previous section and in Fig. 6 (Step 1), we set up a station at each of the three sites – Aachen, Tübingen, and Leipzig – and ensured that the REDCap databases at these locations are accessible via these stations (Step 2). After the functional tests had passed (Step 3), our infrastructure was operational. With access to the data, we can proceed with the data analysis. The train design (Step 4) is described in the next section.

Designing the ‘data discovery’-train

The purpose of the ‘Data Discovery’ study is to initially inspect the data provided by the DICs. Such an inspection can, for example, 1) support the identification of analogous studies that can be used as a reference point, 2) provide insights into the data quality, or 3) serve as a starting point for the design of a more sophisticated follow-up data analysis study. Since our work focuses on Container Trains, we create the train for our data analysis using Python (Version 3.10 – https://peps.python.org/pep-0619/) and Docker (https://www.docker.com) as containerization technology. The train consists of two steps. The first step loads the REDCap data into the train, and the second step is the core data processing unit that generates statistics and produces a PDF report for researchers. To streamline the data querying process, we have developed a custom data importer routine. This routine retrieves the data via direct access to the REDCap database, using the credentials entered by the station administrator before initiating the train execution. For the report representing the analysis results, we gather several statistics. First, we determine the total number of male and female patients at each station (Table 1). Furthermore, we determine the age distribution at each station according to a k-anonymity level of 5 (Fig. 8)40. Lastly, we create plots showing the counts of the Baseline, Examination, and Genetics questionnaires (Fig. 9).
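As an illustration of an age distribution that respects a threshold of k = 5, the following sketch counts patients per age bin and suppresses every bin with fewer than k patients. Small-cell suppression is one common way to apply such a threshold and is an assumption here; the exact procedure, the bin width, and the function name `age_histogram` are not taken from the train’s implementation. The input values are synthetic.

```python
from collections import Counter
from typing import Optional


def age_histogram(ages: list[int], bin_width: int = 10,
                  k: int = 5) -> dict[str, Optional[int]]:
    """Count ages per bin; bins with fewer than k patients are reported as None."""
    counts = Counter((age // bin_width) * bin_width for age in ages)
    return {
        f"{start}-{start + bin_width - 1}": (n if n >= k else None)
        for start, n in sorted(counts.items())
    }


# Synthetic example values, not patient data.
synthetic_ages = [3, 4, 5, 6, 7, 8, 12, 15, 33]
print(age_histogram(synthetic_ages))
```

Reporting a suppressed bin as `None` rather than omitting it keeps the presence of the bin visible to the researcher without revealing a small count.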

Fig. 9: The count of patients across the three sections: Baseline, Examination and Genetics30.

Execution and results of the analysis

After creating the Container Train, we executed it (Step 5) according to the workflow depicted in Fig. 6. Figs. 8 and 9 give an overview of the data held at each station, such as the age distributions and the number of Baseline, Examination, and Genetics sections in the questionnaire. The age distribution in Fig. 8 indicates that Tübingen has a balanced representation of male and female patients, most of them under the age of 20 years. This demographic trend is probably a result of the medical center’s focus on the treatment of leukodystrophies in children. In contrast, Leipzig has a higher proportion of male patients, and the majority of them are adults. Overall, Aachen and Leipzig both contribute more male than female patients (Table 1). Importantly, the dataset from Aachen is missing genetic data, as shown in Fig. 9, in contrast to the complete sets of questionnaires available from Leipzig and Tübingen. To measure the impact of our interoperability concept, we consider the size of the dataset (number of patients). If only one infrastructure is used, we have either 485 patients (Aachen + Leipzig) or 216 patients (Tübingen). Through the interoperability of both ecosystems, we reach a total of 701 patients (Aachen + Leipzig + Tübingen). These results show that our approach enlarges the total dataset available for analysis and enhances our data-sharing capabilities.