The two-layer training

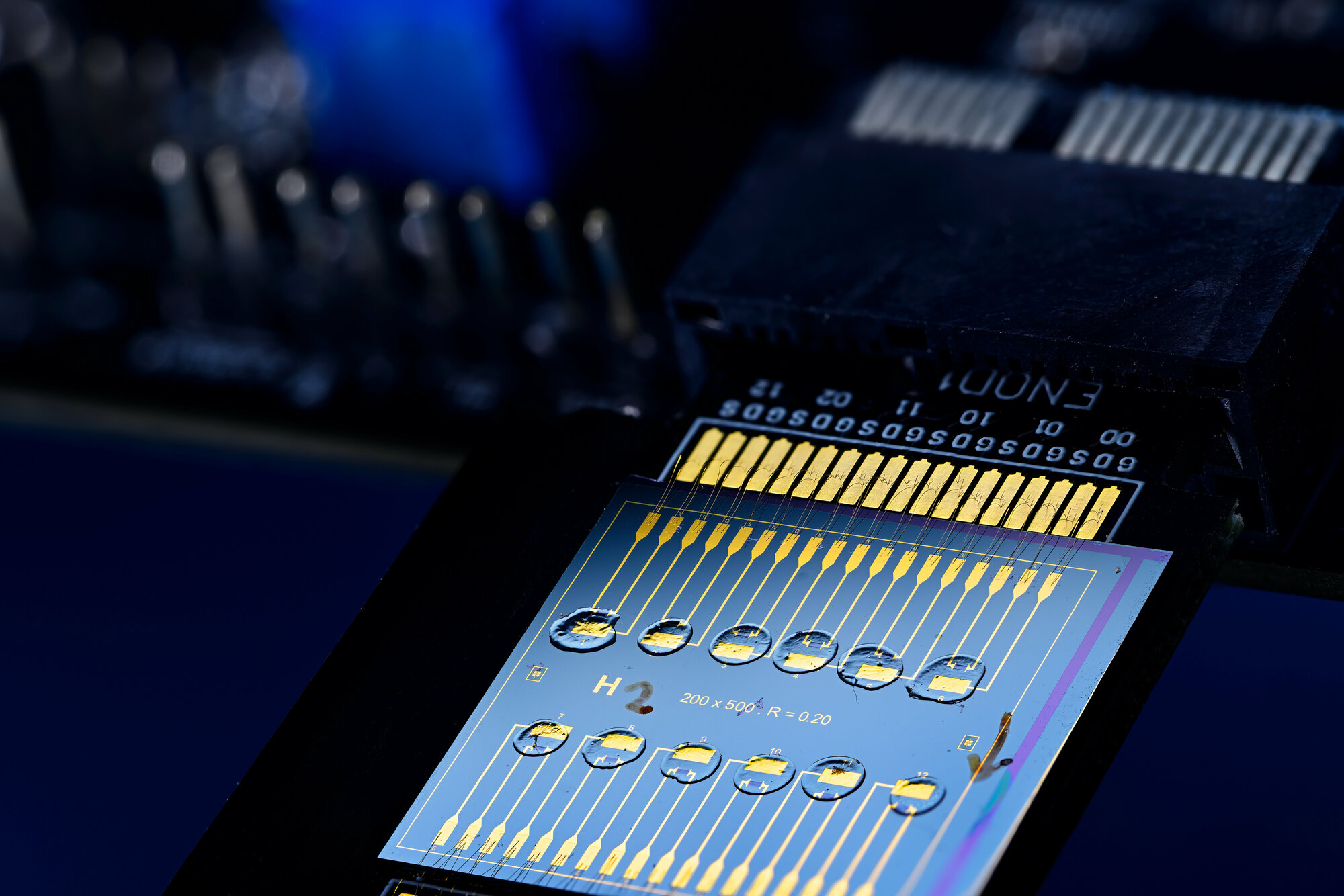

For the researchers, the main challenge was to integrate the key components needed for on-chip training on a single neuromorphic chip. “A major task to solve was the inclusion of the electrochemical random-access memory (EC-RAM) components for example,” says Van de Burgt. “These are the components that mimic the electrical charge storing and firing attributed to neurons in the brain.”

The researchers fabricated a two-layer neural network based on EC-RAM components made from organic materials and tested the hardware with an evolution of the widely used training algorithm backpropagation with gradient descent. “The conventional algorithm is frequently used to improve the accuracy of neural networks, but this is not compatible with our hardware, so we came up with our own version,” says Stevens.

What’s more, with AI in many fields quickly becoming an unsustainable drain on energy resources, training neural networks on hardware components for a fraction of the energy cost is a tempting prospect for many applications – ranging from ChatGPT to weather forecasting.

The future need

While the researchers have demonstrated that the new training approach works, the next logical step is to go bigger, bolder, and better.

“We have shown that this works for a small two-layer network,” says Van de Burgt. “Next, we’d like to involve industry and other big research labs so that we can build much larger networks of hardware devices and test them with real-life data problems.”

This next step would allow the researchers to demonstrate that these systems are very efficient at both training and running useful neural networks and AI systems. “We’d like to apply this technology in several practical cases,” says Van de Burgt. “My dream is for such technologies to become the norm in AI applications in the future.”

Full paper details

“Hardware implementation of backpropagation using progressive gradient descent for in situ training of multilayer neural networks”, Eveline R. W. van Doremaele, Tim Stevens, Stijn Ringeling, Simone Spolaor, Marco Fattori, and Yoeri van de Burgt, Science Advances (2024).

Eveline R. W. van Doremaele and Tim Stevens contributed equally to the research and are both considered first authors of the paper.

Tim Stevens is currently working as a mechanical engineer at MicroAlign, a company co-founded by Marco Fattori.